A few days ago I returned home after a lovely trip to Toulouse, where I attended the annual useR! conference. With more than 1000 participants, it is the largest or second-largest R conference in the world (rstudio::conf seems to be about the same size).

In this blog post, I will recap what I have experienced and learned during the trip.

Day 0 - The curse strikes again

First, some back-story: for the last two years I have been to France on cycling camps, and both years there were problems with the flights. The first year, KLM threw me off the plane due to overbooking and then lost all my luggage. Amazingly, it took them two days to return it, leaving me lost in the Pyrenees without, among other things, my asthma medication and cycling equipment. The following year my plane was delayed by a few hours, so I missed my connecting flight and ended up arriving about 16 hours late.

Consequently, I was a bit nervous when I arrived at the airport, worried that this “curse” might strike again. As you might have guessed by now, it certainly did. Shortly after my arrival they announced that my plane was 1 hour delayed, then 2 hours, then 3… My connecting flight became impossible to reach, and I had to spend the night at a really strange hotel near Amsterdam airport.

Day 1 - Tutorial(s)

Charmingly, I was given a 6:50 flight to Paris the following morning, and after 3 hours of sleep, tons of coffee and yet another transfer, I finally reached a rainy Toulouse at about 11:00.

This delay meant that I unfortunately missed the first workshop, where Hadley Wickham, Jenny Bryan and Jim Hester gave a lesson on package development. Apparently it was pretty interesting, but I wouldn’t know.

For the day’s second workshop, we had chosen the “Docker for Data Science” course held by Tobias Verbeke. I already had some experience with Docker, as we have used it to deploy several R models in the past. Nonetheless, it was interesting to see how the people at Open Analytics use this amazing tool.

Unfortunately for me, I was pretty exhausted from the long journey, and when the tutorial became quite technical towards the end, it was hard to follow. Personally, I think this course would have benefited from a few more hands-on exercises; at the very least, that would have made it easier for me to stay awake!

My colleague and I, excited for the upcoming conference (and slightly tired from the long trip)

Day 2 - First day of talks

After 10 hours of much-needed sleep in a hotel that was literally 10 meters from the conference center, I was more than ready to embark on the first day of talks.

The day’s first keynote was given by Julia “Squid” Lowndes, who talked about how her team used R to do “better science in less time”. It was fascinating to hear how R revolutionized their workflow and how their team dynamic worked, but I wish she had gone into more detail about the technical parts.

Next up were the parallel sessions. Choosing which one to attend is always annoyingly difficult, as there are always several interesting sessions running at the same time.

Here are some key takeaways of the day:

- “disk.frame” is a pretty cool package to deal with data that doesn’t fit into your computer’s RAM.

- There are lots of cool new functions in dplyr and tidyr, such as group_modify() and unnest_auto().

- Romain François (one of the dplyr developers) doesn’t appreciate smug comments (disguised as questions) from data.table fanatics.

- ShinyProxy (https://www.shinyproxy.io/) seems like a useful open source tool for deploying Shiny applications.

- I wish I had access to Hermione’s Time-Turner necklace so I wouldn’t have to miss any important sessions.
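For anyone who hasn’t tried the new dplyr and tidyr functions yet, here is a minimal sketch of what they do. It assumes dplyr 0.8.1 or later (for group_modify()) and tidyr 1.0.0 or later (for unnest_auto()); the small `people` table is made up purely for illustration, and this is not code from the talks themselves.

```r
library(dplyr)
library(tidyr)

# group_modify(): run a function on each group's data frame and
# bind the results back together, re-attaching the grouping column.
# Here: the two most fuel-efficient cars for each cylinder count.
top_two <- mtcars %>%
  group_by(cyl) %>%
  group_modify(~ head(arrange(.x, desc(mpg)), 2))

# unnest_auto(): inspect a list-column and decide whether to unnest it
# wider (elements share names) or longer (elements are unnamed).
# `people` is a hypothetical toy table for illustration only.
people <- tibble(
  id = 1:2,
  info = list(
    list(name = "Ada", lang = "R"),
    list(name = "Bob", lang = "Python")
  )
)
unnested <- people %>% unnest_auto(info)  # chooses unnest_wider() here

print(top_two)
print(unnested)
```

Since the elements of `info` share the names `name` and `lang`, unnest_auto() picks unnest_wider() and turns them into ordinary columns. It also prints a message telling you which variant it chose, which makes it handy for exploring unfamiliar nested data.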

The conference center was located next to a beautiful park

Day 3 - More talks, space center and conference dinner

The third day started off with a really interesting talk from the incredible Joe Cheng, creator of the Shiny package. In this talk, he announced shinymeta, a new package that makes it possible to extract “normal” R code from your Shiny code using a family of “meta” functions such as metaReactive() and metaRender(). This seems like a brilliant solution to a very difficult and important problem, and I’m sure it will be appreciated by anyone managing a complex Shiny application.

Afterwards there was another series of parallel sessions, and this time we had planned a bit better which talks to see. First we went to the session on text mining, followed by a session on forecasting.

Key takeaways:

- The “polite” package seems very handy for responsible web scraping, as it respects a site’s robots.txt and limits how often you send requests.

- Apparently you can use deep learning to predict the gender of German nouns, but it struggles with the most commonly used words, as there’s often basically no logic involved. Is it “der Nutella” or “die Nutella”? No one knows, at least not this neural network.

- The “tidyverts” collection of packages, created to assist you with all the primary steps of a time series problem, has progressed tremendously and includes a lot of useful functions with a neat and tidy interface. I’ll probably write a blog post on this subject in the near future.

Before the conference dinner, I also had a brief chat with Hadley Wickham (chief scientist at RStudio and creator of the tidyverse) about the future of the Apache Arrow project. I had promised my colleagues I’d get a picture with him, so I had to be the awkward guy asking him for a selfie - but thankfully, he was really chill about it.

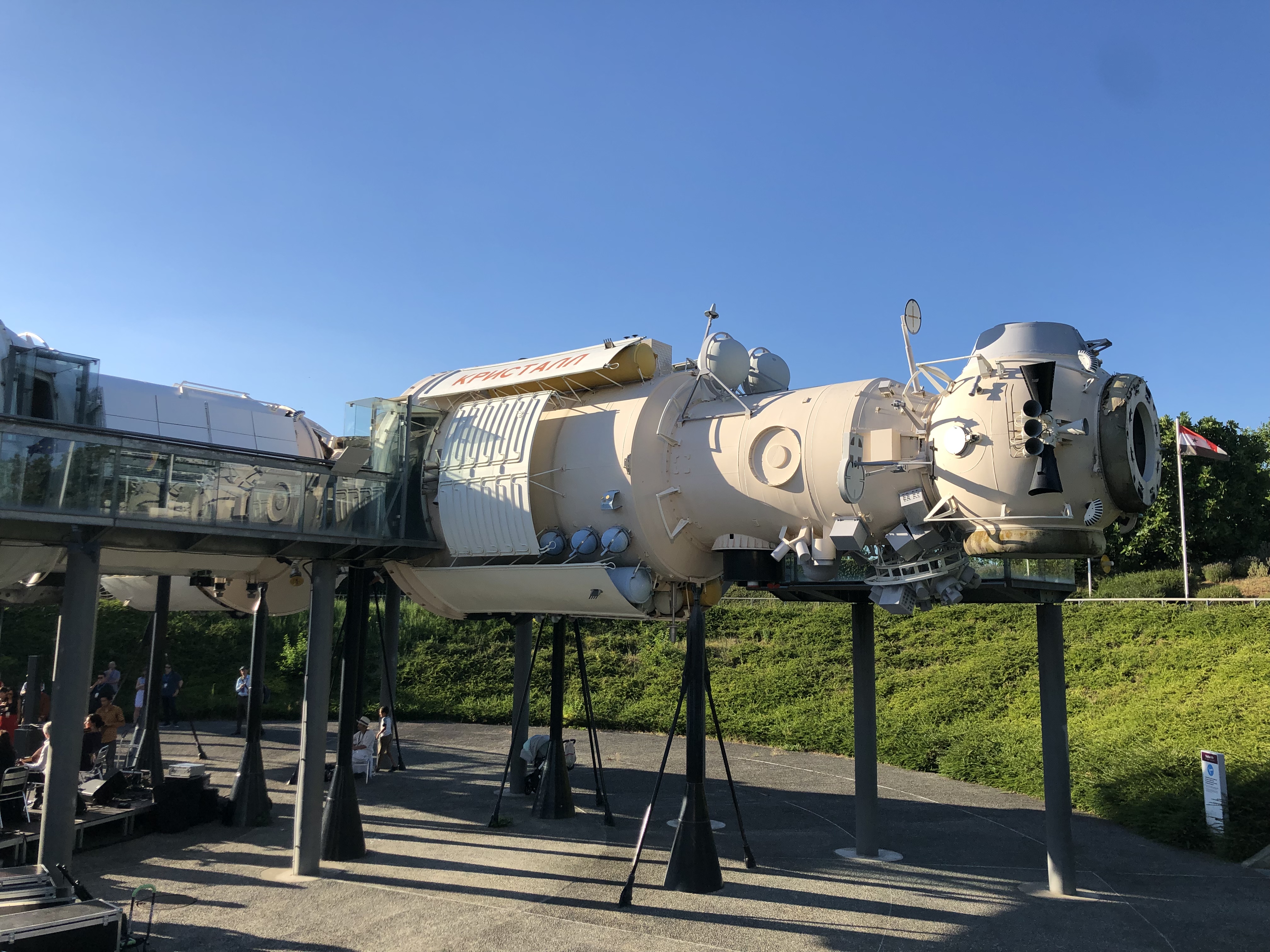

The conference dinner was held at the space center in Toulouse (Cité de l’espace). This was a great idea, as literally everyone I saw was walking around with a huge grin while checking out all the cool stuff related to space travel and the moon landing. Some might say that parts of the museum were aimed at kids, but on this day, we were all kids again.

An actual space ship. Woah!

Day 4 - Final day

The last day in Toulouse started with a very technical keynote by Bettina Grün about clustering techniques in R (perhaps a bit too technical for the broader audience). She mentioned a lot of packages one could use, but I wish she had been clearer about which ones she actually recommends (I guess she was being diplomatic).

Then there was another round of parallel sessions, with the following key takeaways:

- “Metaflow”, created by Netflix, seems to be a really useful tool for orchestrating ML pipelines, but unfortunately it doesn’t appear to be open source quite yet. As far as I can tell, the R package “drake” is the closest open-source alternative.

- Deploying ML models as APIs inside Docker containers seems to be what everyone is doing nowadays. Thankfully, that’s what my team has been doing for the last year as well.

- The “vroom” package is ridiculously fast for reading data, particularly data containing strings (even faster than data.table::fread). It gets this speed by making clever use of the ALTREP framework introduced in R 3.5.0: it indexes the file up front and only parses values when they are actually accessed.

- The FastR project (https://github.com/oracle/fastr), an alternative implementation of R built on GraalVM, seems like a handy tool for speeding up your R code, particularly code with loops.

- The “future” package will finally get progress bars. Yay!

Afterwards, we attended the first 30 minutes of the final keynote, “AI for good” by Julien Cornebise. It was very intriguing, so I regret missing the final part - but given my luck, we couldn’t take any risks when it came to catching the plane.

To my great surprise, the journey home was undramatic, with delays of “only” 45 and 30 minutes on the two flights.

All in all, it was a great trip where I learned a lot and met numerous interesting people. So long, Toulouse!